This is an old revision of the document!

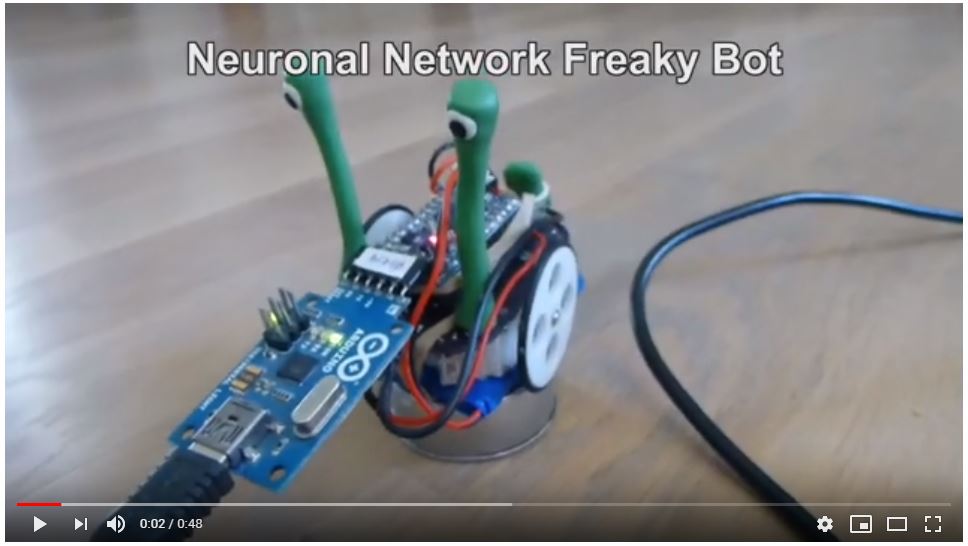

Neuronal Network Bot

Hier eine kleine Vorstellung von einem sehr einfachen Neuronalen Netzwerk. Damit kann der der Freaky Bot v5 mit ganz wenigen Lerndaten das fahren hinter einem Objekt sich selber beibringen.

Als Basis habe ich die Arbeit von Ralph Heymsfeld (http://robotics.hobbizine.com/arduinoann.html) genommen und auf den Freaky Bot v5 angepasst.

Das ganze funktioniert folgendermassen. Im Coding werden dem Bot Lerndaten mitgegeben. Es sind ganz wenige Lerndaten, im Prinzip nur die Extreme. Beim der “Follower” Funktion wo der Bot einem Objekt nachfahren muss habe ich nur diese Extreme definiert:

- No Obstactle

- Obstacle on left

- Obstacle on right

- Obstacle left and right

- Obstacle left and right close

Als Input habe ich zwei Sensoren also zwei Werte. Als Output habe ich 2 Motoren also auch zwei Werte. Gültige Werte sind von 0 bis 1. Dabei habe ich folgendes definiert:

- Sensoren: 1 No Obstacle, 0 Obstacle close

- Motoren: 1 Motor forward, 0.5 Motor Stop

Daraus ergeben sich folgende Eingabe und Ausgabe Extreme:

Eingabe:

{ 1, 1 }, // No Obstactle

{ 0.6, 1 }, // Obstacle on left

{ 1, 0.6 }, // Obstacle on right

{ 0.6, 0.6 }, // Obstacle left and right

{ 0.3, 0.3 }, // Obstacle left and right close

Ausgabe:

{ 1, 1 }, // No Obstactle

{ 1, 0.2 }, // Obstacle on left

{ 0.2, 1 }, // Obstacle on right

{ 0.7, 0.7 }, // Obstacle left and right

{ 0.5, 0.5 }, // Obstacle left and right close

Das habe ich natürlich etwas mit verschiedenen Versuchen ausprobiert.

Diese primitiven Lerndaten reichen jedoch schon um ein Neuroales Netzwerk zu trainieren, welches dann zum sanfte Fahren genutzt werden kanne. Dabei liefert das Netzwerk auch für die Zwischenwerte bei den Sensoren Zwischenwerte für die Motoren.

Folgende Definitionen vom Neuronalen Netzwerk müssen auch noch festgelegt werden. Damit kann dann auch etwas gespielt werden.

const int PatternCount = 5; // The number of training items or rows in the truth table const int InputNodes = 2; // The number of input neurons const int OutputNodes = 2; // The number of output neurons const int HiddenNodes = 5; // The number of hidden neurons const float LearningRate = 0.3; // The number of hidden neurons const float Momentum = 0.9; // Adjusts how much the results of the previous iteration affect the current iteration const float InitialWeightMax = 0.5; // Sets the maximum starting value for weights. const float Success = 0.02; // Level of minimum Success

Meistens schaft der Arduino es in ca. 350 Trainings auf die definierte Genauigkeit von 0.02 zu kommen. Was für mich immer genug war.

So sieht ein Trainings Lauf aus. Er läuft immer alle 5 Lerndaten durch und gibt eine Fehlerrate aus:

Training Pattern: 0 Input: 1.00 1.00 Target: 1.00 1.00 Output 0.23 0.58 Training Pattern: 1 Input: 0.60 1.00 Target: 1.00 0.20 Output 0.23 0.59 Training Pattern: 2 Input: 1.00 0.60 Target: 0.20 1.00 Output 0.23 0.58 Training Pattern: 3 Input: 0.60 0.60 Target: 0.70 0.70 Output 0.23 0.58 Training Pattern: 4 Input: 0.30 0.30 Target: 0.50 0.50 Output 0.23 0.58 TrainingCycle: 1 Error: 0.96

Wenn die Fehlerrate kleiner ist als gewünscht folgt der nächste Schritt: Jetzt werden vordefinierte Sensorwerte durch das Neuronale Netzwerk geführt und das Ergebnis wird ausgegeben. Hier seht Ihr in der ersten Zeile ein Beispiel. Der Sensor Rechts (R IR) steht auf 0.4 das heisst, da ist ein Objekt in der Nähe und der Sensor Links steht auf 0.9. Links ist das Objekt also weiter weg. Die Motoren werden jetzt unterschiedlich angesteuert. Der Rechte Motor mit 0.98 das ist fast Vollgas und der Linke Motor mit 0.11 das ist mehr als nur stop.

Test Input: R IR: 0.40 L IR: 0.90 Output Nodes : 0.98 : 0.11 Out R Mot: 0.98 L Mot: 0.11 Test Input: R IR: 0.90 L IR: 0.90 Output Nodes : 0.85 : 0.86 Out R Mot: 0.85 L Mot: 0.86 Test Input: R IR: 0.70 L IR: 0.30 Output Nodes : 0.15 : 0.96 Out R Mot: 0.15 L Mot: 0.96

Jetzt folgt das wirkliche fahren. Dazu gibt der tBot auch noch ein Protokoll aus, das ist jedoch etwas Kryptisch.

Go to run bot: R IR: 122 L IR: 78 - IN R FL IR: 0.74 L FL IR: 0.59 Output Nodes : 0.89 : 0.54 NN Out R Mot: 0.89 L Mot: 0.54 - Set Speed R MOT: 166 L MOT: 60 R IR: 88 L IR: 84 - IN R FL IR: 0.63 L FL IR: 0.61 Output Nodes : 0.74 : 0.72 NN Out R Mot: 0.74 L Mot: 0.72 - Set Speed R MOT: 120 L MOT: 115 R IR: 96 L IR: 98 - IN R FL IR: 0.65 L FL IR: 0.66 Output Nodes : 0.73 : 0.76 NN Out R Mot: 0.73 L Mot: 0.76 - Set Speed R MOT: 118 L MOT: 129 R IR: 100 L IR: 80 - IN R FL IR: 0.67 L FL IR: 0.60 Output Nodes : 0.81 : 0.65 NN Out R Mot: 0.81 L Mot: 0.65 - Set Speed R MOT: 142 L MOT: 93

Hier auch schon mal der komplette Code:

/******************************************************************************

* Dinoi Follower Neuronal Network Version Arduino Mini Pro 5V

*

* Follower Neuronal Network

*

* Arduino NN - An artificial neural network for the Arduino

*

* Based on Neuronal Network from robotics.hobbizine.com/arduinoann.html

******************************************************************************/

#include <math.h>

//*******************************************

// Smart Debug

//*******************************************

// Debugging einschalten, zum ausschalten auskommentieren

#define _SMARTDEBUG

// Debug Makros

#ifdef _SMARTDEBUG

// Hilfsfunktion für WAIT - Makro

void DebugWait(String txt) {

// buffer leeren

char ch;

while (Serial.available()) ch = Serial.read();

ch = 0;

Serial.print(txt);

Serial.println(" >press 'c' to continue...");

// auf 'c' warten

do {

if (Serial.available() > 0) ch = Serial.read();

} while (ch != 'c');

// buffer leeren

while (Serial.available()) ch = Serial.read();

}

#define DEBUG_INIT(speed) Serial.begin(speed)

#define DEBUG_PRINTLN(txt) Serial.println(txt)

#define DEBUG_PRINT(txt) Serial.print(txt)

#define DEBUG_PRINTLN_VALUE(txt, val) Serial.print(txt); Serial.print(": "); Serial.println(val)

#define DEBUG_PRINT_VALUE(txt, val) Serial.print(txt); Serial.print(": "); Serial.print(val)

#define DEBUG_WAIT(txt, condition) if (condition) DebugWait(txt)

#else

#define DEBUG_INIT(speed)

#define DEBUG_PRINT(txt)

#define DEBUG_PRINTLN(txt)

#define DEBUG_PRINT_VALUE(txt, val)

#define DEBUG_PRINTLN_VALUE(txt, val)

#define DEBUG_WAIT(txt, condition)

#endif

/******************************************************************************

/* Robo Pin Configuration

/******************************************************************************/

#define MDL 4 // Motor Direction Left

#define MSL 5 // Motor Speed Left

#define MSR 6 // Motor Speed Right

#define MDR 7 // Motor Direction Right

int IrLedVr = 3;

int IrLedVl = 2;

int IrLedHr = 10;

int IrLedHl = 9;

int IrRecVr = A3;

int IrRecVl = A2;

int IrRecHr = A0;

int IrRecHl = A1;

const int ledPin = 13; // the number of the LED pin

#define DIST 65 // Define Minimum Distance

#define MTRF 255 // Motor Topspeed Right Forward

#define MTLF 255 // Motor Topspeed Left Forward

#define MTRB 255 // Motor Topspeed Right Backward

#define MTLB 255 // Motor Topspeed Left Backward

// Variables

int lspeed = 0; // current speed of left motor

int rspeed = 0; // current speed of right motor

int spause = 1; // wait time if a whisker was touched

long delay_time = 0; // time when pause was triggered

int ledState = LOW; // ledState used to set the LED

long previousMillis = 0; // will store last time LED was updated

long interval = 5000; // interval at which to blink (milliseconds)

bool protsta = false;

bool motsta = true;

/******************************************************************

* Network Configuration - customized per network

******************************************************************/

// Logic

// Sensor -255 to 255

// Motor -255 to 255

// Normal forward => Sensor 150 => Motor 150

// Obstacle => Sensor 80 => 80

const int PatternCount = 5; // The number of training items or rows in the truth table

const int InputNodes = 2; // The number of input neurons

const int OutputNodes = 2; // The number of output neurons

const int HiddenNodes = 5; // The number of hidden neurons

const float LearningRate = 0.3; // The number of hidden neurons

const float Momentum = 0.9; // Adjusts how much the results of the previous iteration affect the current iteration

const float InitialWeightMax = 0.5; // Sets the maximum starting value for weights.

const float Success = 0.02; // Level of minimum Success

float Input[PatternCount][InputNodes] = {

{ 1, 1 }, // No Obstactle

{ 0.6, 1 }, // Obstacle on left

{ 1, 0.6 }, // Obstacle on right

{ 0.6, 0.6 }, // Obstacle left and right

{ 0.3, 0.3 }, // Obstacle left and right close

};

const float Target[PatternCount][OutputNodes] = {

{ 1, 1 }, // No Obstactle

{ 1, 0.2 }, // Obstacle on left

{ 0.2, 1 }, // Obstacle on right

{ 0.7, 0.7 }, // Obstacle left and right

{ 0.5, 0.5 }, // Obstacle left and right close

};

/******************************************************************

* Network Variables

******************************************************************/

char mode; // (t => train,c=>check, r => run)

int i, j, p, q, r;

int ReportEvery1000;

int RandomizedIndex[PatternCount];

long TrainingCycle;

float Rando;

float Error;

float Accum;

float Hidden[HiddenNodes];

float Output[OutputNodes];

float HiddenWeights[InputNodes+1][HiddenNodes];

float OutputWeights[HiddenNodes+1][OutputNodes];

float HiddenDelta[HiddenNodes];

float OutputDelta[OutputNodes];

float ChangeHiddenWeights[InputNodes+1][HiddenNodes];

float ChangeOutputWeights[HiddenNodes+1][OutputNodes];

/******************************************************************************

/* Setup

/******************************************************************************/

void setup(){

// Neuronal Setup

/******************************************************************************/

randomSeed(analogRead(3));

ReportEvery1000 = 1;

for( p = 0 ; p < PatternCount ; p++ ) {

RandomizedIndex[p] = p ;

}

delay(1000);

mode = 't';

// Robo motors Setup

/******************************************************************************/

pinMode (MDL, OUTPUT);

pinMode (MSL, OUTPUT);

pinMode (MSR, OUTPUT);

pinMode (MDR, OUTPUT);

// all IR Leds / Recevier

pinMode(ledPin, OUTPUT);

pinMode(IrLedVr, OUTPUT);

pinMode(IrLedVl, OUTPUT);

pinMode(IrLedHr, OUTPUT);

pinMode(IrLedHl, OUTPUT);

pinMode(IrRecVr, INPUT);

pinMode(IrRecVl, INPUT);

pinMode(IrRecHr, INPUT);

pinMode(IrRecHl, INPUT);

// Serial Debug Interface

DEBUG_INIT(57600);

DEBUG_PRINTLN("Robot Starting");

digitalWrite(ledPin, HIGH);

delay(200);

digitalWrite(ledPin, LOW);

delay(200);

digitalWrite(ledPin, HIGH);

delay(200);

digitalWrite(ledPin, LOW);

delay(200);

digitalWrite(ledPin, HIGH);

delay(200);

digitalWrite(ledPin, LOW);

delay(200);

digitalWrite(ledPin, HIGH);

delay(200);

digitalWrite(ledPin, LOW);

delay(200);

}

/******************************************************************************

/* Loop

/******************************************************************************/

void loop (){

// Train NN

/******************************************************************************/

if ( mode == 't' ){

train_nn();

}

// Check NN

/******************************************************************************/

if ( mode == 'c' ){

check_nn();

}

// Run NN

/******************************************************************************/

if ( mode == 'r' ){

run_nn();

}

}

/******************************************************************************

/* Run NN

/******************************************************************************/

void run_nn(){

delay(30);

// Get IR Values

rspeed = irRead(IrRecVr,IrLedVr,'d') * 2;

lspeed = irRead(IrRecVl,IrLedVl,'d') * 2;

DEBUG_PRINT_VALUE("R IR",rspeed);

DEBUG_PRINT_VALUE(" L IR",lspeed);

// IR from -100 to +200

rspeed = constrain(rspeed,-100,200);

lspeed = constrain(lspeed,-100,200);

// Convert IR to float from 0 to 1

float f_rspeed = rspeed;

float f_lspeed = lspeed;;

f_lspeed = ( 100 + f_lspeed ) / 300;

f_rspeed = ( 100 + f_rspeed ) / 300;

DEBUG_PRINT_VALUE(" - IN R FL IR",f_rspeed);

DEBUG_PRINT_VALUE(" L FL IR",f_lspeed);

// Ask solution from NN

InputToOutput(f_lspeed, f_rspeed); //INPUT to ANN to obtain OUTPUT

DEBUG_PRINT_VALUE(" NN Out R Mot",Output[0]);

DEBUG_PRINT_VALUE(" L Mot",Output[1]);

rspeed = Output[0] * 300 - 100;

lspeed = Output[1] * 300 - 100;

DEBUG_PRINT_VALUE(" - Set Speed R MOT",rspeed);

DEBUG_PRINTLN_VALUE(" L MOT",lspeed);

// Drive Motors

drive(lspeed,rspeed);

}

/******************************************************************************

/* Check NN

/******************************************************************************/

void check_nn(){

float TestInput[] = {0.9, 0.9};

DEBUG_PRINTLN("");

DEBUG_PRINTLN("Test Run:");

// Test 1

//********

DEBUG_PRINT_VALUE("Test Input: R IR",TestInput[0]);

DEBUG_PRINT_VALUE(" L IR",TestInput[1]);

InputToOutput(TestInput[0], TestInput[1]); //INPUT to ANN to obtain OUTPUT

DEBUG_PRINT_VALUE(" Out R Mot",Output[0]);

DEBUG_PRINTLN_VALUE(" L Mot",Output[1]);

// Test 2

//********

TestInput[0] = 0.4;

TestInput[1] = 0.9;

DEBUG_PRINT_VALUE("Test Input: R IR",TestInput[0]);

DEBUG_PRINT_VALUE(" L IR",TestInput[1]);

InputToOutput(TestInput[0], TestInput[1]); //INPUT to ANN to obtain OUTPUT

DEBUG_PRINT_VALUE(" Out R Mot",Output[0]);

DEBUG_PRINTLN_VALUE(" L Mot",Output[1]);

// Test 2

//********

TestInput[0] = 0.7;

TestInput[1] = 0.3;

DEBUG_PRINT_VALUE("Test Input: R IR",TestInput[0]);

DEBUG_PRINT_VALUE(" L IR",TestInput[1]);

InputToOutput(TestInput[0], TestInput[1]); //INPUT to ANN to obtain OUTPUT

DEBUG_PRINT_VALUE(" Out R Mot",Output[0]);

DEBUG_PRINTLN_VALUE(" L Mot",Output[1]);

// go to run

mode = 'r';

DEBUG_PRINTLN("Go to run bot:");

}

/******************************************************************************

/* InputToOutput

/******************************************************************************/

void InputToOutput(float In1, float In2)

{

float TestInput[] = {0, 0};

TestInput[0] = In1;

TestInput[1] = In2;

/******************************************************************

Compute hidden layer activations

******************************************************************/

for ( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = HiddenWeights[InputNodes][i] ;

for ( j = 0 ; j < InputNodes ; j++ ) {

Accum += TestInput[j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0 / (1.0 + exp(-Accum)) ;

}

/******************************************************************

Compute output layer activations and calculate errors

******************************************************************/

for ( i = 0 ; i < OutputNodes ; i++ ) {

Accum = OutputWeights[HiddenNodes][i] ;

for ( j = 0 ; j < HiddenNodes ; j++ ) {

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0 / (1.0 + exp(-Accum)) ;

}

DEBUG_PRINT(" Output Nodes");

for ( i = 0 ; i < OutputNodes ; i++ ) {

DEBUG_PRINT_VALUE(" ",Output[i]);

}

}

/******************************************************************************

/* Train NN

/******************************************************************************/

void train_nn(){

/******************************************************************

* Initialize HiddenWeights and ChangeHiddenWeights

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

for( j = 0 ; j <= InputNodes ; j++ ) {

ChangeHiddenWeights[j][i] = 0.0 ;

Rando = float(random(100))/100;

HiddenWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;

}

}

/******************************************************************

* Initialize OutputWeights and ChangeOutputWeights

******************************************************************/

for( i = 0 ; i < OutputNodes ; i ++ ) {

for( j = 0 ; j <= HiddenNodes ; j++ ) {

ChangeOutputWeights[j][i] = 0.0 ;

Rando = float(random(100))/100;

OutputWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;

}

}

DEBUG_PRINTLN("Initial/Untrained Outputs: ");

toTerminal();

/******************************************************************

* Begin training

******************************************************************/

for( TrainingCycle = 1 ; TrainingCycle < 2147483647 ; TrainingCycle++) {

/******************************************************************

* Randomize order of training patterns

******************************************************************/

for( p = 0 ; p < PatternCount ; p++) {

q = random(PatternCount);

r = RandomizedIndex[p] ;

RandomizedIndex[p] = RandomizedIndex[q] ;

RandomizedIndex[q] = r ;

}

Error = 0.0 ;

/******************************************************************

* Cycle through each training pattern in the randomized order

******************************************************************/

for( q = 0 ; q < PatternCount ; q++ ) {

p = RandomizedIndex[q];

/******************************************************************

* Compute hidden layer activations

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = HiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

Accum += Input[p][j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;

}

/******************************************************************

* Compute output layer activations and calculate errors

******************************************************************/

for( i = 0 ; i < OutputNodes ; i++ ) {

Accum = OutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0/(1.0 + exp(-Accum)) ;

OutputDelta[i] = (Target[p][i] - Output[i]) * Output[i] * (1.0 - Output[i]) ;

Error += 0.5 * (Target[p][i] - Output[i]) * (Target[p][i] - Output[i]) ;

}

/******************************************************************

* Backpropagate errors to hidden layer

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = 0.0 ;

for( j = 0 ; j < OutputNodes ; j++ ) {

Accum += OutputWeights[i][j] * OutputDelta[j] ;

}

HiddenDelta[i] = Accum * Hidden[i] * (1.0 - Hidden[i]) ;

}

/******************************************************************

* Update Inner-->Hidden Weights

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

ChangeHiddenWeights[InputNodes][i] = LearningRate * HiddenDelta[i] + Momentum * ChangeHiddenWeights[InputNodes][i] ;

HiddenWeights[InputNodes][i] += ChangeHiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

ChangeHiddenWeights[j][i] = LearningRate * Input[p][j] * HiddenDelta[i] + Momentum * ChangeHiddenWeights[j][i];

HiddenWeights[j][i] += ChangeHiddenWeights[j][i] ;

}

}

/******************************************************************

* Update Hidden-->Output Weights

******************************************************************/

for( i = 0 ; i < OutputNodes ; i ++ ) {

ChangeOutputWeights[HiddenNodes][i] = LearningRate * OutputDelta[i] + Momentum * ChangeOutputWeights[HiddenNodes][i] ;

OutputWeights[HiddenNodes][i] += ChangeOutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

ChangeOutputWeights[j][i] = LearningRate * Hidden[j] * OutputDelta[i] + Momentum * ChangeOutputWeights[j][i] ;

OutputWeights[j][i] += ChangeOutputWeights[j][i] ;

}

}

}

/******************************************************************

* Every 1000 cycles send data to terminal for display

******************************************************************/

ReportEvery1000 = ReportEvery1000 - 1;

if (ReportEvery1000 == 0)

{

// Blink Led for State Info

digitalWrite(ledPin, HIGH);

delay(200);

digitalWrite(ledPin, LOW);

delay(200);

digitalWrite(ledPin, HIGH);

delay(200);

digitalWrite(ledPin, LOW);

delay(200);

DEBUG_PRINT_VALUE("TrainingCycle",TrainingCycle);

DEBUG_PRINTLN_VALUE(" Error",Error);

toTerminal();

if (TrainingCycle==1)

{

ReportEvery1000 = 999;

}

else

{

ReportEvery1000 = 1000;

}

}

/******************************************************************

* If error rate is less than pre-determined threshold then end

******************************************************************/

if( Error < Success ) break ;

}

DEBUG_PRINT_VALUE("TrainingCycle: ",TrainingCycle);

DEBUG_PRINTLN_VALUE(" Error",Error);

toTerminal();

DEBUG_PRINTLN("");

DEBUG_PRINTLN("");

DEBUG_PRINT("Training Set Solved!");

DEBUG_PRINTLN_VALUE(" Error",Error);

DEBUG_PRINTLN("");

ReportEvery1000 = 1;

// done=> set mode to run

mode = 'c';

}

void toTerminal()

{

for( p = 0 ; p < PatternCount ; p++ ) {

DEBUG_PRINTLN(" ");

DEBUG_PRINT_VALUE(" Training Pattern",p);

DEBUG_PRINT(" Input: ");

for( i = 0 ; i < InputNodes ; i++ ) {

DEBUG_PRINT(Input[p][i]);

DEBUG_PRINT(" ");

}

DEBUG_PRINT(" Target: ");

for( i = 0 ; i < OutputNodes ; i++ ) {

DEBUG_PRINT(Target[p][i]);

DEBUG_PRINT(" ");

}

/******************************************************************

* Compute hidden layer activations

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = HiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

Accum += Input[p][j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;

}

/******************************************************************

* Compute output layer activations and calculate errors

******************************************************************/

for( i = 0 ; i < OutputNodes ; i++ ) {

Accum = OutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0/(1.0 + exp(-Accum)) ;

}

DEBUG_PRINT(" Output ");

for( i = 0 ; i < OutputNodes ; i++ ) {

DEBUG_PRINT(Output[i]);

DEBUG_PRINT(" ");

}

}

}

/******************************************************************************

/* Funktion: Drive

/******************************************************************************/

void drive( int left, int right)

{

int lstate = LOW;

int rstate = LOW;

left = constrain(left,-255,255);

right = constrain(right,-255,255);

// invert left

left = left * -1;

// invert right

//right = right * -1;

if (left >= 0)

{

// remap the motor value to the calibrated interval

// speed is alwas handled in the range of [-255..255]

left = map(left,0,255,0,MTLF);

}

else

{

lstate = HIGH;

left = 255 - map(-left,0,255,0,MTLB);

}

if (right >= 0)

{

right = map(right,0,255,0,MTRF);

}

else

{

rstate = HIGH;

right = 255 - map(-right,0,255,0,MTRB);

}

analogWrite(MSL, left);

digitalWrite(MDL, lstate);

analogWrite(MSR, right);

digitalWrite(MDR, rstate);

}

/******************************************************************************

* Funktion: irRead (a,b)

* a: Pinnummer des Ir Empfängers

* b: Pinnummer des Ir Senders

* c: modedeb: Space = Normalmodus returns HIGH if > DIST

* d = Distancemodus return Distance 0 to 100 mm

* Retourwert: HIGH = IR-Licht detektiert

* d.h. gemessener Wert unterscheidet sich zum Umgebungslicht

******************************************************************************/

int irRead(int readPin, int triggerPin, char modedeb)

{

boolean zustand;

int umgebungswert = 0;

int irwert =0;

float uLichtzuIr;

for (int i=0;i<10;i++) {

digitalWrite(triggerPin, LOW);

delay(10);

umgebungswert = umgebungswert + analogRead(readPin);

digitalWrite(triggerPin, HIGH);

delay(10);

irwert = irwert + analogRead(readPin);

}

// Remove Umgebungswert

irwert = irwert - umgebungswert;

// detect obstacle

if(irwert>DIST){

zustand = HIGH;

}else{

zustand = LOW;

}

// for Debug

if(protsta && zustand || modedeb == 'd'){

delay(10);

if(modedeb == 'd'){

irwert = map(irwert, 0, 300, 100, -100);

};

return irwert;

} else {

return zustand;

};

}

Hier seht ihr das Video vom Freaky Bot. Er lernt zuerst und läuft dann mit der Hilfe vom gelernten Neuronalen Netzwerk einem Objekt hinterher.

Letzte Aktualisierung: © boxtec internet appliances · the better security products